Talking crap? Remember the Dekoda, the $600 (plus subscription fee) AI-infused camera that attaches to the inside of your toilet and photographs your stools? It seems that maker Kohler's claim that the data it captures is end-to-end encrypted may be a load of crap.

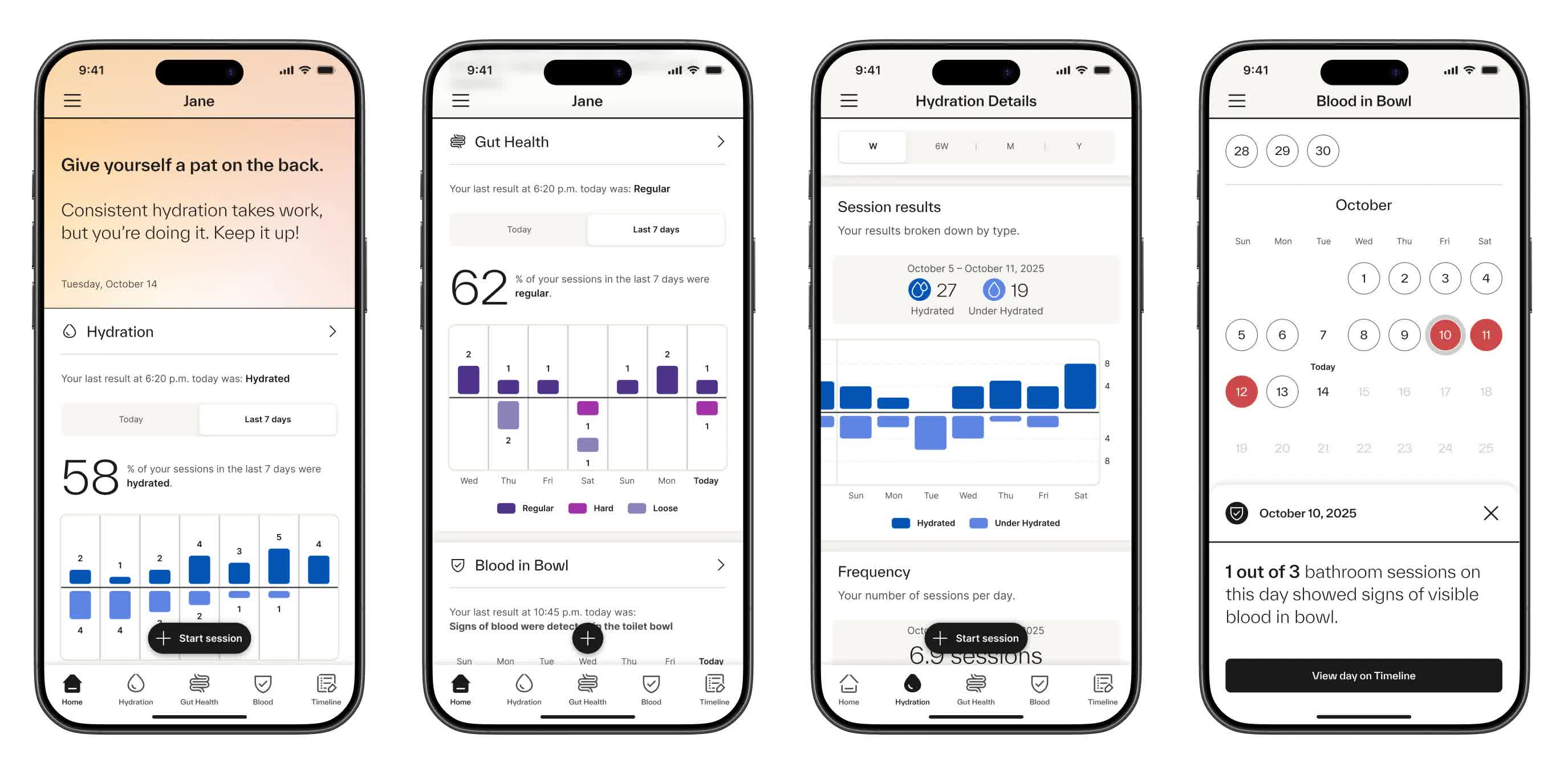

Kohler Health, the new division from home-products company Kohler, announced the Dekoda in October. The company says the images the device captures, combined with Kohler's validated machine-learning algorithms, offer valuable insights into your health and wellness.

This sort of device is always going to raise privacy concerns, of course. Kohler assured users that the camera only points straight down toward the toilet's contents, so it shouldn't capture any low-hanging body parts.

Kohler also emphasized that all data collected by the device and companion app was "end-to-end encrypted" (E2EE). But researcher and former FTC technology advisor Simon Fondrie-Teitler was confused as to how E2EE, usually associated with messaging apps like Signal and WhatsApp, applied to a toilet camera. He also noted that Kohler Health doesn't have any user-to-user sharing features.

Fondrie-Teitler writes in his post that what Kohler is referring to as E2EE is likely just HTTPS (TLS) encryption between the app and its server, not true end-to-end encryption.

The researcher adds that emails exchanged with Kohler's privacy contact clarified that Kohler itself is able to decrypt user data. "User data is encrypted at rest, when it's stored on the user's mobile phone, toilet attachment, and on our systems. Data in transit is also encrypted end-to-end, as it travels between the user's devices and our systems, where it is decrypted and processed to provide our service."
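For readers who want the distinction spelled out, here is a minimal Python sketch of the two models. It is an illustration under stated assumptions, not Kohler's actual implementation: the `Server` class, the function names, and the sample reading are all hypothetical, and the cipher is a toy built from SHA-256 that stands in for real encryption and should never be used in practice. The point is simply who holds which key.

```python
import hashlib
import secrets


def keystream_xor(key: bytes, nonce: bytes, data: bytes) -> bytes:
    """Toy stream cipher (SHA-256 in counter mode). Illustration only, NOT secure."""
    stream = bytearray()
    counter = 0
    while len(stream) < len(data):
        stream.extend(hashlib.sha256(key + nonce + counter.to_bytes(8, "big")).digest())
        counter += 1
    # XOR is symmetric, so the same call both encrypts and decrypts.
    return bytes(a ^ b for a, b in zip(data, stream))


class Server:
    """Hypothetical service that holds the transport key (as a TLS endpoint would)."""

    def __init__(self, transport_key: bytes):
        self.transport_key = transport_key

    def receive(self, nonce: bytes, wire: bytes) -> bytes:
        # The transport layer ends here: the server strips it off
        # and returns whatever it is then able to see.
        return keystream_xor(self.transport_key, nonce, wire)


def send_transport_only(reading: bytes, server: Server) -> bytes:
    """Encrypt in transit only: the server ends up with the plaintext."""
    nonce = secrets.token_bytes(16)
    wire = keystream_xor(server.transport_key, nonce, reading)
    return server.receive(nonce, wire)


def send_end_to_end(reading: bytes, user_key: bytes, server: Server) -> bytes:
    """Seal with a key only the user holds, then send over the same transport."""
    inner_nonce = secrets.token_bytes(16)
    sealed = inner_nonce + keystream_xor(user_key, inner_nonce, reading)
    outer_nonce = secrets.token_bytes(16)
    wire = keystream_xor(server.transport_key, outer_nonce, sealed)
    return server.receive(outer_nonce, wire)


def open_sealed(sealed: bytes, user_key: bytes) -> bytes:
    """Only a holder of user_key can recover the reading."""
    nonce, body = sealed[:16], sealed[16:]
    return keystream_xor(user_key, nonce, body)
```

In the first model, what the server sees after `receive` is the original reading, which matches Kohler's description of data being "decrypted and processed" on its systems. In the second, the server sees only sealed bytes, and only someone holding `user_key` can call `open_sealed` to read them, which is what E2EE normally implies.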

Why would Kohler be interested in checking what you leave in the toilet bowl, aside from the stated health analysis? Its privacy policy says the company can use your data, and share it with third parties, to refine the Kohler Health Platform, improve its products, promote its business, and train its AI and machine-learning models.

The policy says that the data is anonymized, and that users must consent before it is used to train Kohler's AI.

The main issue here is the company's use of the term end-to-end encryption, which gives users a misleading sense of privacy. Kohler said in a statement that it used the term with respect to the encryption of data between its users (sender) and Kohler Health (recipient). In other words, the data is secured against outsiders but not private from Kohler itself.